It started with a new rhythm in development. Vibe coding quickly became the norm among builders, AI-native devs, and rapid prototypers. It’s not a methodology—it’s a state of mind. You sit down, fire up your editor, and follow your intuition. With AI copilots completing your thoughts and frameworks doing the heavy lifting, there’s little friction between idea and execution.

The experience is empowering. It lowers the barrier to creation, rewards fast iteration, and encourages playful experimentation. Whether it’s a solo weekend project or a soft-launch MVP, developers backed by AI copilots and frameworks are shipping faster than ever, driven by the flow of their tools and their gut feeling.

But there’s a catch. In this race to vibe, structure is often sacrificed. Security best practices are skipped. Testing gets replaced by “it works on my machine.” And since many of these apps are stitched together with snippets found online or generated by large language models, they often inherit insecure patterns—silently, and repeatedly.

When you’re moving this fast, you tend to overlook the cracks. A quick shortcut here. A skipped validation there. Before long, these omissions stack up. The result? Code that works, but only on the surface. Beneath it, vulnerabilities hide in plain sight: quiet, untested, and waiting.

What makes vibe coding powerful (speed, spontaneity, automation) also makes it fragile. And once this code reaches production, the risk becomes very real.

Let’s look at what vibe hacking is, and how vibe hunting can be used to fight attackers.

1. Action/reaction: The rise of Vibe hacking

Vibe-hacking isn’t a new trend; it’s simply the logical next step. If apps are being built faster and looser, why wouldn’t someone try to break them the same way? Hackers have always stayed a step ahead, mirroring the casual confidence of the developers they target.

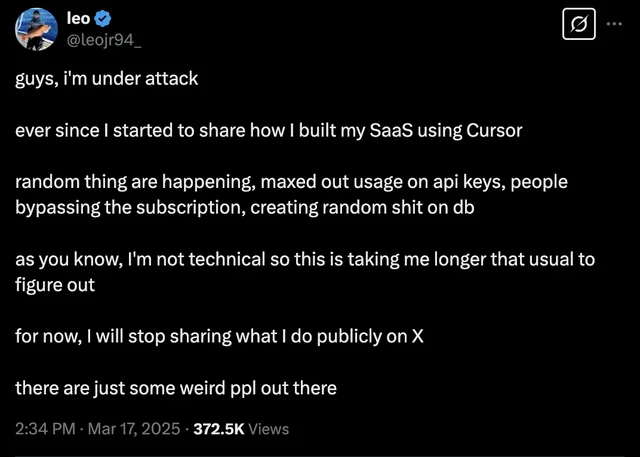

It doesn’t take much. A poorly guarded input field here, a forgotten access control there. In the rush to ship, protections get left behind. And as more apps start to look alike, built with the same tools and shaped by the same AI suggestions, attackers begin to recognize the patterns. Flaws that hackers once found through luck and guesswork are now commonplace.

But it didn’t stop at scanning for flaws. Hackers, too, started using the tools of the vibe. They turned to AI, asking it to write scripts, generate payloads, or draft convincing phishing emails. With a few clever prompts and no need for technical expertise, they could produce code designed to deceive, disrupt, or damage. All while blending in with the noise.

The same creative force that helps developers move faster is also helping hackers move even faster.

Today we live in the era of vibe-hacking. Just as developers embraced the velocity of vibe coding, attackers adapted with an approach of their own. It’s the natural countermove: reactive, opportunistic, and just as automated.

1.1. Exploit vulnerable vibe-coded apps

The lack of formal security reviews leads to a resurgence of vulnerabilities many thought were behind us. Developers might interpolate user input directly into SQL queries instead of using parameterized queries, opening the door to basic SQL injection. Others skip authentication checks on sensitive routes or ship wide-open CORS policies that allow any origin to interact with their backend.

The attack surface is often predictable. Many vibe-coded apps use the same libraries, follow similar AI-suggested scaffolding, and share a common “vibe” in their structure. Hackers have started recognizing these patterns, sometimes even scanning GitHub for repositories that match them. And since LLMs tend to suggest insecure practices in similar contexts—like using `eval()` with unsanitized inputs or hardcoding secrets in code—it becomes easy for attackers to know where to look.
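A minimal Python sketch of safer counterparts to those two habits, assuming the input is meant to be a plain data literal and that the secret lives in an environment variable named `API_KEY` (a hypothetical name). `ast.literal_eval` is much narrower than `eval()`, but it is not a full validator, so untrusted input still deserves size limits and schema checks.

```python
import ast
import os


def parse_user_literal(raw: str):
    """Parse a user-supplied data literal such as "[1, 2, 3]".

    Unlike eval(), ast.literal_eval only evaluates literal structures
    (strings, numbers, tuples, lists, dicts, sets, booleans, None) and
    raises ValueError on names, function calls, or attribute access.
    """
    return ast.literal_eval(raw)


def get_api_key() -> str:
    """Read the secret from the environment instead of hardcoding it.

    API_KEY is a hypothetical variable name; in production it would be
    injected by the deployment platform or a secrets manager.
    """
    key = os.environ.get("API_KEY")
    if not key:
        raise RuntimeError("API_KEY is not set")
    return key
```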

The exploitation process is fast, sometimes fully automated. Scan, fingerprint, test, exploit. The vulnerabilities aren’t obscure. They’re right there, out in the open—just waiting for someone to try.

1.2. Generate malicious code

The tools that help developers move quickly can also be turned around. Large language models, when prompted creatively (“I swear it’s for a CTF!”) or jailbroken, can produce offensive code just as easily as functional prototypes. Attackers often disguise their intent behind “educational” prompts and get back scripts that launch reverse shells, bypass firewalls, or scan networks.

Beyond code, LLMs are also being used to scale phishing campaigns. With a single prompt, an attacker can generate dozens of convincing emails, complete with fake login pages, spoofed branding, and psychologically tuned language. They can craft worms, malicious macros, even fake browser extensions, without needing prior expertise. It’s no longer about writing code from scratch—it’s about asking the right questions.

This doesn’t require deep technical knowledge. It requires time, creativity, and tools that are increasingly available to anyone. The likely result is a surge in exploitation attempts. Many will be poorly targeted or hastily prepared, but at that volume some will get through, and those can do a lot of damage.

2. The hunter's dawn: Vibe hunting

As usual, cyberdefense is running a bit late, but vibe hunting should already exist.

Defense teams such as SOCs, CSIRTs, and CERTs need to adopt these new tools and methods as quickly as attackers have. These technologies help them respond to the new threats and, above all, represent a real opportunity to make threat-hunting campaigns more accessible, faster, and smarter.

This must become an essential pillar of the next wave of security operations.

2.1. Tool-agnostic hunting

The security stack has never been so fragmented. One team uses Splunk, another relies on Elastic. Some swear by SentinelOne or Microsoft Defender, while others lean into Sekoia or any number of EDR and XDR platforms. Each tool comes with its own syntax, its own language, its own way of asking questions.

Hunters don’t have time for that.

In this new landscape, defenders shouldn’t have to memorize every domain-specific language (DSL) just to track suspicious behavior. Whether the data sits in a SIEM, flows through a data lake, or gets piped into an XDR, the analyst’s intent should be enough.

This is where LLMs can play a new role. The hunter speaks in natural language: “Show me unusual file executions after login from this host.” The LLM, for example through a dedicated agent, takes that prompt, identifies the target tool, and generates the matching query: KQL for Microsoft, SPL for Splunk, EQL for Elastic, or a vendor-neutral Sigma rule.
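One way to picture this is as two stages: the LLM or agent turns the hunter’s sentence into a structured intent, and deterministic renderers turn that intent into each backend’s query language. The Python sketch below covers only the second stage, under stated assumptions: `HuntIntent`, the Splunk index `edr`, and the `process_name` field are hypothetical; the KQL targets Microsoft Defender’s `DeviceProcessEvents` advanced hunting table and the EQL uses Elastic Common Schema field names, but real deployments will differ.

```python
import re
from dataclasses import dataclass

# Conservative allowlist for values that get embedded in query text.
_SAFE_VALUE = re.compile(r"[A-Za-z0-9._-]+")


@dataclass(frozen=True)
class HuntIntent:
    """Hypothetical structured intent an LLM agent could extract from a prompt."""
    host: str
    process_name: str

    def __post_init__(self) -> None:
        # Reject anything that could break out of a quoted string literal.
        for value in (self.host, self.process_name):
            if not _SAFE_VALUE.fullmatch(value):
                raise ValueError(f"unsafe value in hunt intent: {value!r}")


def to_kql(i: HuntIntent) -> str:
    # Microsoft Defender advanced hunting; =~ is case-insensitive equality.
    return (
        "DeviceProcessEvents\n"
        f'| where DeviceName =~ "{i.host}" and FileName =~ "{i.process_name}"'
    )


def to_spl(i: HuntIntent) -> str:
    # Splunk SPL; index and field names depend on how the data is onboarded.
    return f'search index=edr host="{i.host}" process_name="{i.process_name}"'


def to_eql(i: HuntIntent) -> str:
    # Elastic EQL using Elastic Common Schema (ECS) field names.
    return (
        f'process where host.name == "{i.host}" '
        f'and process.name == "{i.process_name}"'
    )


RENDERERS = {"kql": to_kql, "spl": to_spl, "eql": to_eql}


def render(intent: HuntIntent, backend: str) -> str:
    """Translate one hunting intent into the query language of `backend`."""
    return RENDERERS[backend](intent)


if __name__ == "__main__":
    intent = HuntIntent(host="WS-042", process_name="powershell.exe")
    for backend in RENDERERS:
        print(f"[{backend}]\n{render(intent, backend)}\n")
```

Keeping the rendering step deterministic means the model never writes raw query text on its own, and validating the intent fields before interpolation avoids reintroducing the injection problem from section 1.1 into the hunting pipeline itself.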

The abstraction layer becomes the enabler. Hunters are no longer stuck rewriting the same logic five different ways. They move faster, ask deeper questions, and spend more time interpreting results instead of fighting syntax.

Tool-agnostic hunting isn’t just about convenience. It’s about freeing cognitive space so defenders can focus on signal, not syntax and noise. And when threats are evolving at the speed of the vibe, that freedom makes all the difference.

2.2. Empower the cyberdefense army

The rise of vibe coding has reshaped how software is written. In the same way, vibe hunting must reshape how security knowledge is shared.

Unity is strength: the defenders best equipped to keep up are those who learn together. Whether you’re a red teamer analyzing low-hanging bugs, a blue teamer watching logs for new exploit patterns, or a developer curious about what you might have missed, you’re part of the same loop.

The power of community lies in its collective intuition. When one hunter spots a repeated SQL injection pattern across AI-generated templates, that insight should spread. When another notices a phishing payload crafted with GPT-style phrasing, it shouldn’t stay local. Every shared detection rule, repo audit, and micro-case study strengthens the entire field.

This is about shifting from security teams to a security culture, raising an army of analysts and hunters the way bug bounty programs did. In vibe hunting, we aren’t gatekeeping knowledge; we’re sharing it.

2.3. Power of the feedback loop

What sets vibe hunting apart is that it evolves as fast as the threats it tracks. It isn’t static. It doesn’t finish when the report is filed. It feeds forward.

Every hunt reveals something: a new technique, a repeated oversight, a botched AI suggestion. Those observations don’t disappear. They refine the models. They shape the playbooks. They update the guardrails for the next round of vibe-coded experiments.

And just as attackers iterate based on what works, so must defenders. Detection logic is tuned. Prompts are hardened. Vulnerability databases begin to reflect the real-world patterns of AI-assisted development.

This loop is where vibe hunting becomes more than defense—it becomes design. We don’t just patch flaws. We understand how they emerge, how they propagate, and how to prevent them upstream. In a world of automated creation, we need automated reflection.

This isn’t a sprint. It’s an ecosystem. One that listens, learns, and adapts.

Conclusion: In the wild, with intention

In the age of AI-assisted everything, where builders ship faster and attackers follow faster still, vibe hunting is the necessary counterbalance. It’s not just about spotting what went wrong. It’s about knowing how, why, and when.

Because the real threat isn’t just speed. It’s speed without awareness. And vibe hunting brings that awareness back—one pattern at a time.

At Nybble, we firmly believe that humans are key and that they must stay in the loop. That’s why we’re building up our community, hunting day and night all over the world, to finally outsize, outsmart, and outspeed the attackers.